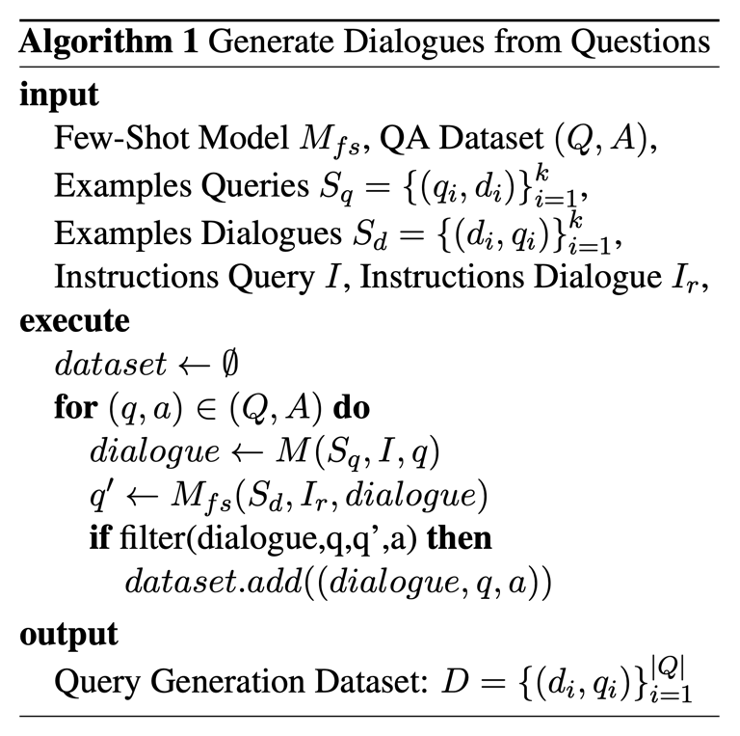

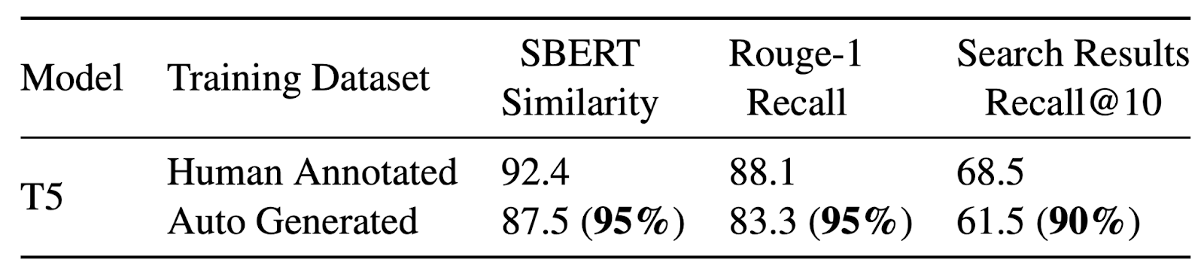

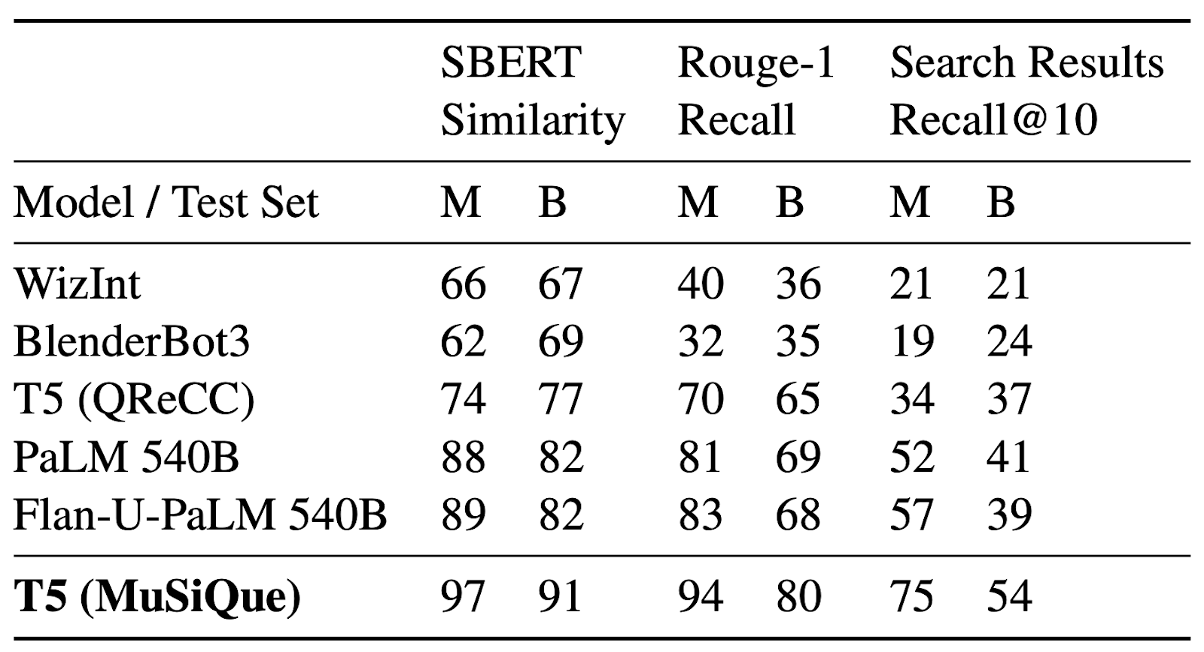

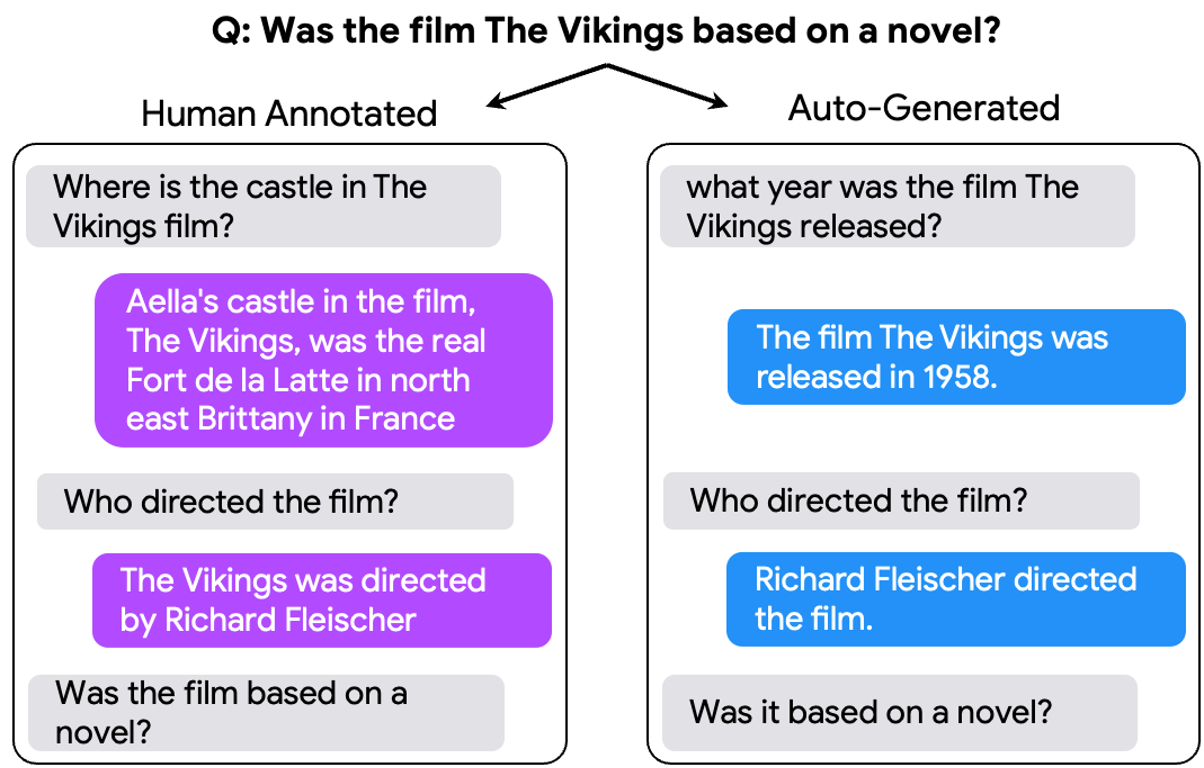

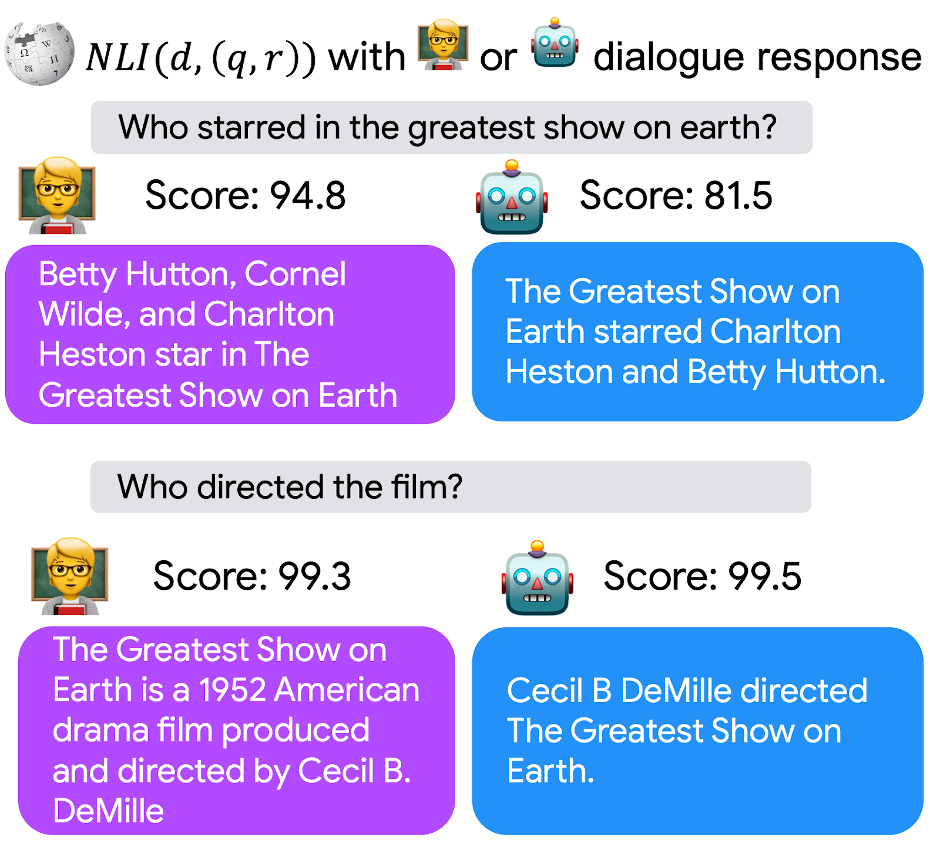

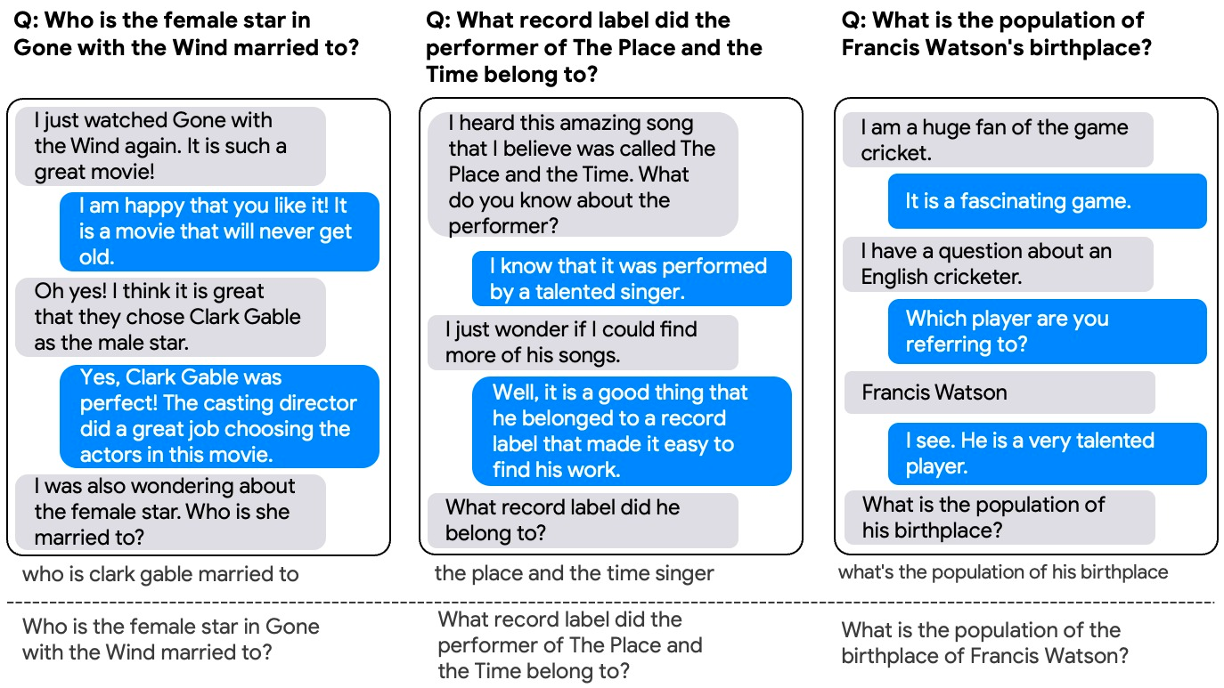

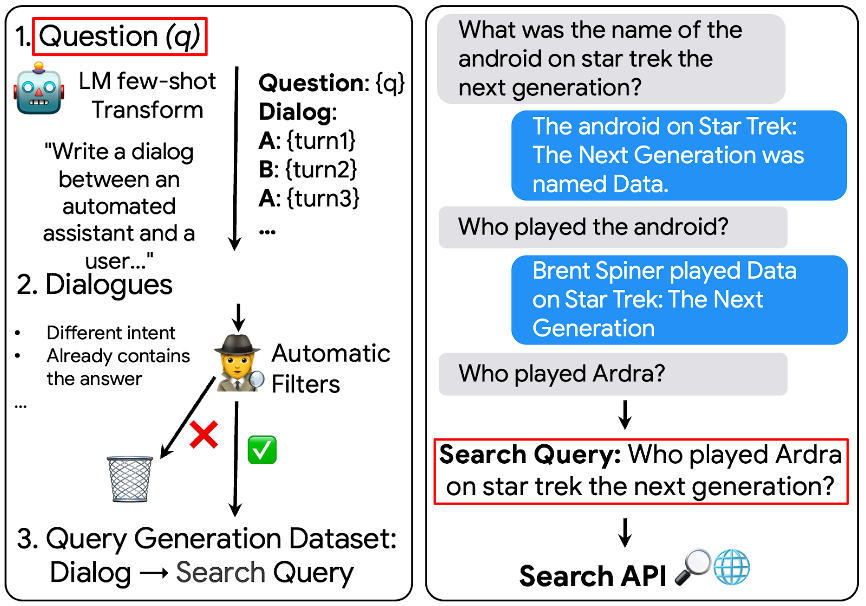

q2d is an automatic data generation pipeline that produces information-seeking dialogs from questions. Its synthetic dialogs can stand in for human-annotated data when training query-generation models, and the same pipeline can create high-quality training and evaluation data across multiple domains.
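As a rough illustration of how such data might be consumed, the sketch below pairs each seed question with a dialog generated from it, treating the dialog as the model input and the question as the target query. This is a minimal sketch under assumptions, not q2d's actual code: `QueryGenExample`, `build_examples`, and `toy_generator` are hypothetical names, and the generator is a placeholder for whatever model produces the dialogs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class QueryGenExample:
    """One training pair for a query-generation model (hypothetical schema)."""
    dialog: List[str]  # generated dialog turns, used as model input
    query: str         # the seed question, used as the target query


def build_examples(
    questions: List[str],
    generate_dialog: Callable[[str], List[str]],
) -> List[QueryGenExample]:
    """Pair each question with the dialog generated from it.

    `generate_dialog` is an assumed interface: any callable that maps a
    question to a list of dialog turns.
    """
    examples = []
    for question in questions:
        dialog = generate_dialog(question)
        if dialog:  # skip questions for which no dialog was produced
            examples.append(QueryGenExample(dialog=dialog, query=question))
    return examples


def toy_generator(question: str) -> List[str]:
    """Placeholder generator; a real pipeline would call a language model."""
    return [
        "user: I was reading about this topic earlier.",
        f"user: Could you help me find out: {question}",
    ]


examples = build_examples(["Who wrote Dune?"], toy_generator)
```

Passing the generator in as a callable keeps the pairing logic independent of whichever model produces the dialogs.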